Server-side rendering (SSR) is gaining an increasingly significant foothold in the ever-evolving front-end landscape. Most frameworks now offer their own SSR-oriented meta-frameworks, such as Next.js for React, Nuxt for Vue, and SvelteKit for Svelte. The Angular community developed Analog.js to fill that gap, but the native solution lagged behind for some time. However, recent versions indicate that the Angular team has renewed its focus on this area, introducing new features, better performance, and more advanced capabilities. SSR can be a powerful addition to your toolkit, so let’s dive in and explore how it works with Angular.

To explore this topic with practical examples, I created a simple e-commerce demo application. If you want to experiment with SSR yourself, you can find it here.

Looking for a production-ready Angular SSR application? Feel free to check out the source code of angular.love.

How does it work?

To better understand how SSR works, let’s first recall how a standard client-side rendered (CSR) application is displayed to the end user:

- The browser downloads the HTML file (an almost empty shell with the well-known app-root element) along with stylesheets, assets, and JS code from the server.

- Scripts are parsed and interpreted: Angular’s logic instructs the browser on how to construct the DOM structure using the application’s components.

- Usually, what appears on the screen depends on data fetched from APIs, so the browser makes HTTP requests and updates the UI accordingly.

- Once the data is loaded and the DOM is entirely constructed, the application is fully rendered and ready to use.

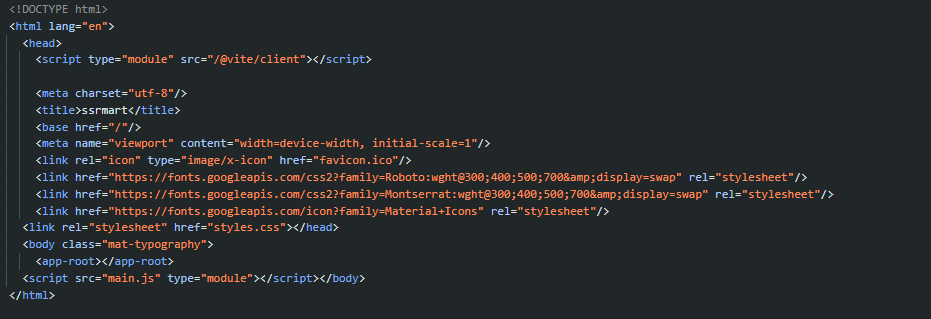

Here is what the browser receives from the server for the client-side rendered version of the demo application:

Now let’s break down how a server-side rendered application is displayed:

- The browser downloads the HTML, stylesheets, and assets. Unlike with client-side rendering, however, this HTML is already fully rendered and contains the page content.

- The browser then downloads, parses, and executes JS scripts.

- Angular takes control of the DOM and makes the page interactive. This process is known as hydration.

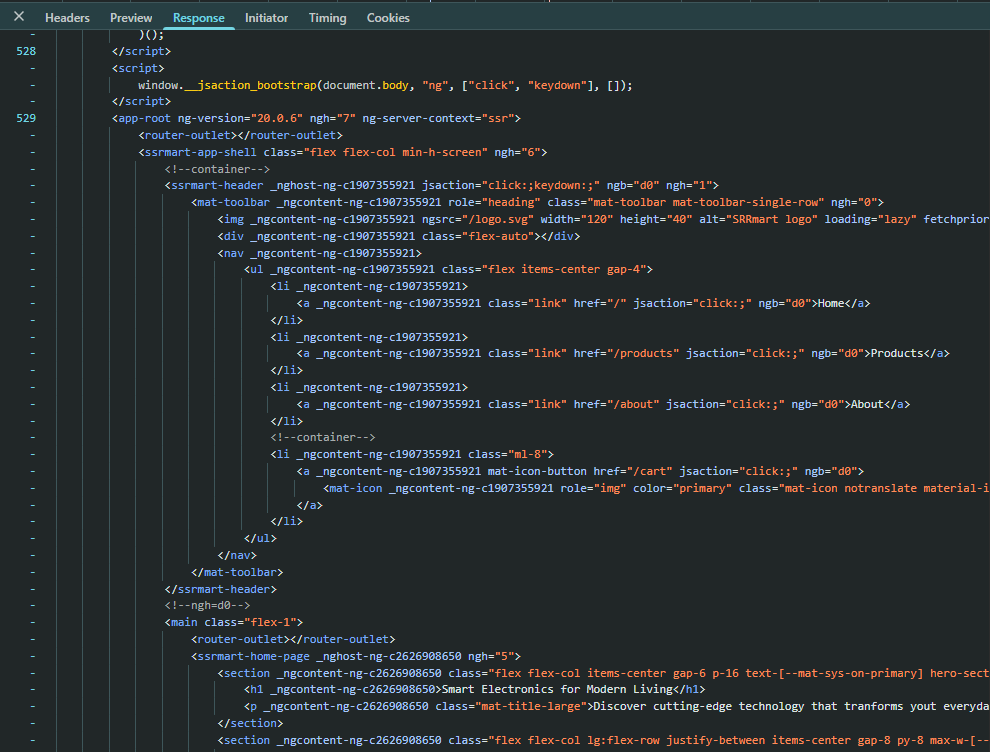

In SSR, the HTML is rendered ahead of time on the server and already includes all content when it reaches the browser:

You can also see how it looks in the Preview tab of the DevTools Network panel:

How to start working with Angular SSR?

Since Angular CLI version 17, running the ng new command prompts you to enable Server-Side Rendering (SSR) and Static Site Generation (SSG) for newly created applications. Alternatively, you can enable SSR right away by passing the --ssr flag: ng new --ssr.

If you want to add SSR support to an existing project, you can run the ng add @angular/ssr command.

After enabling SSR, Angular CLI generates a few additional files. Here’s a quick overview of their roles:

- server.ts – the main server entry point that bootstraps your Angular application on the server side. It sets up the Express server configuration for handling requests and rendering pages.

- app.config.server.ts – defines server-only providers and configurations needed for SSR functionality

- app.routes.server.ts – specifies the render mode for the application’s routes

- main.server.ts – serves as the server bootstrap file, similar to main.ts but specifically for server-side rendering initialization

In addition, your angular.json (or project.json, if you’re using Nx) gets extended configuration sections for server builds.

Congratulations: your app is now running with SSR enabled, and you can start taking advantage of everything it offers. Speaking of which…

Why do we use Angular SSR?

With Angular SSR, the server sends the initial HTML already rendered, either at build time or dynamically when the user makes a request. The JavaScript delivered alongside it still contains Angular’s logic, which takes control of the page and handles all further interactions in the browser. From that point onward, it behaves like any standard Angular application.

You might wonder why this extra step is necessary and why we introduce additional complexity. Here are some key reasons:

Performance

Parsing and executing JavaScript is one of the most resource-intensive tasks a browser must handle. In a client-side rendered application, the browser initially receives an almost empty HTML file (so the user sees a blank screen) and must construct all the content from scratch. You can observe this process using the Performance tab in Chrome DevTools.

In addition to this expensive process, there’s also the time required to fetch the scripts from the server, which depends heavily on network conditions. When you factor in mobile devices, where hardware limitations and slower connections are common, performance often degrades even further.

The longer it takes for your application to become visible and usable, the greater the chance users will lose interest. According to Google research, as page load time increases from 1 to 3 seconds, the probability of a user leaving the page increases by 32%. That represents potential customers your business might lose.

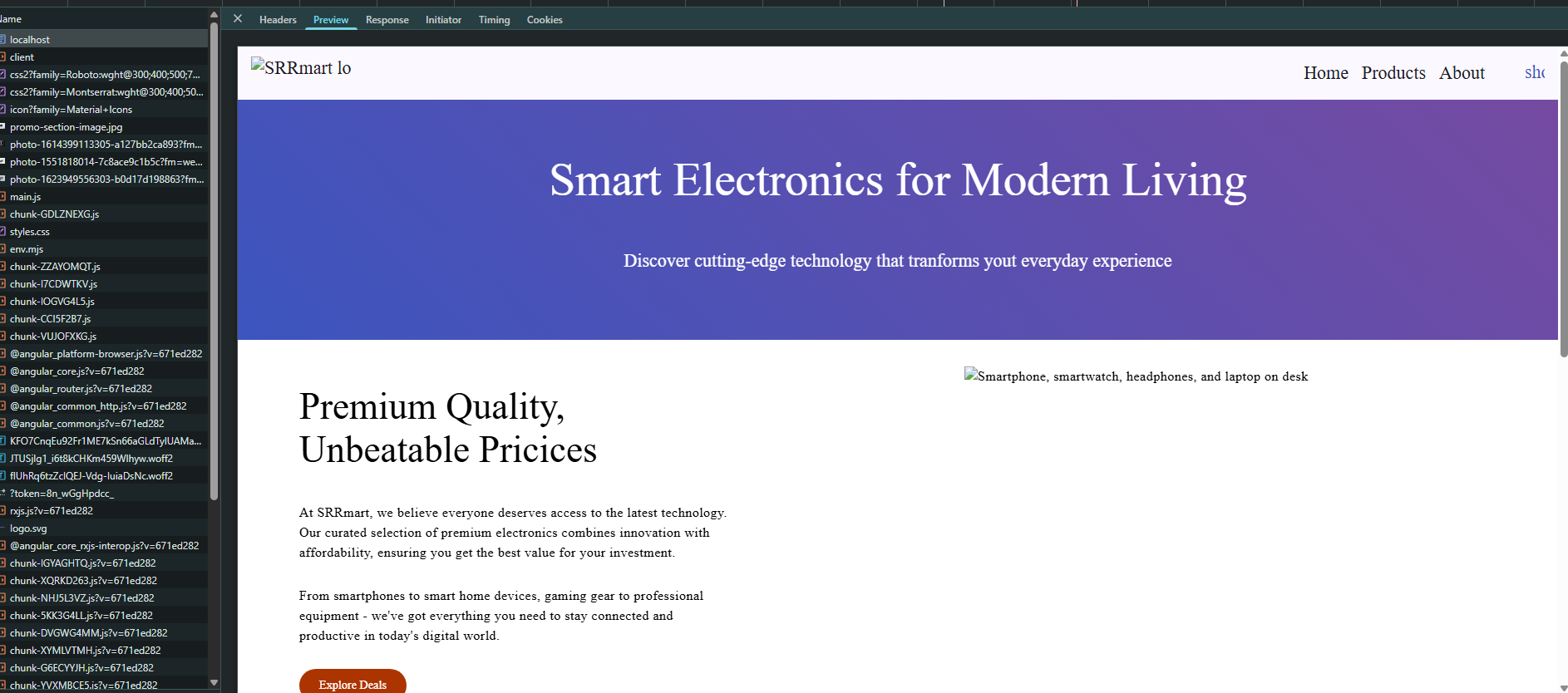

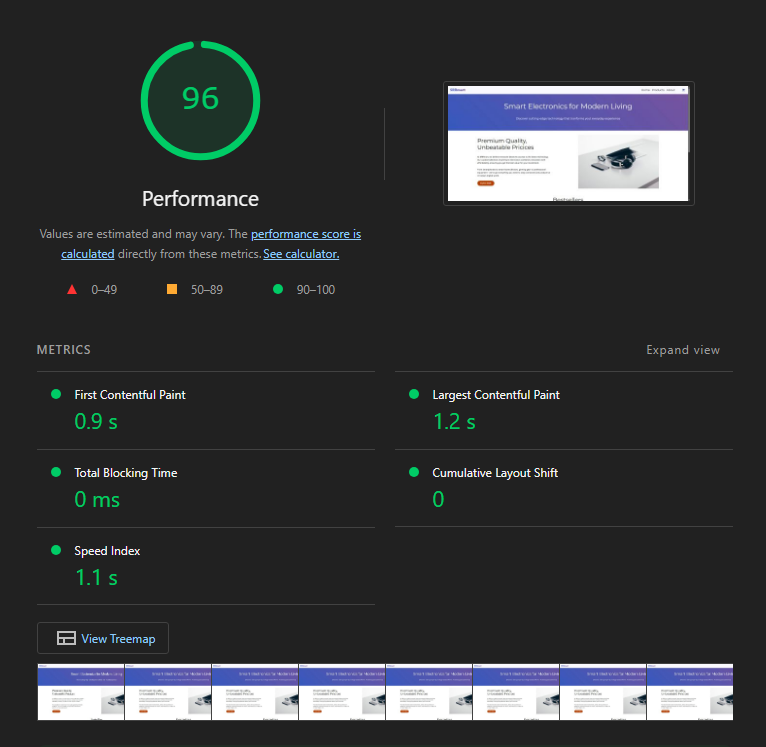

One of the best ways to audit your application’s performance is Lighthouse – an automated tool that runs a series of tests against a webpage and generates a comprehensive report with scores for each category, along with recommendations for improvements.

For performance audits, Lighthouse evaluates several key metrics, including:

- First Contentful Paint (FCP) – time until first text/image appears (target: <1.8s)

- Largest Contentful Paint (LCP) – time until the largest visible element loads (target: <2.5s)

- Total Blocking Time (TBT) – total time the main thread is blocked by long tasks during loading (target: <0.15s)

- Cumulative Layout Shift (CLS) – visual stability score measuring unexpected layout shifts (target: <0.1)

- Speed Index (SI) – how quickly page content is visually populated (target: <1.3s)
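If you want to check these targets automatically, for example in a CI pipeline, you can compare them with the values in a Lighthouse JSON report. The sketch below assumes the report’s audits object, keyed by audit IDs such as first-contentful-paint, where each audit’s numericValue is in milliseconds for timing metrics and unitless for CLS. The checkBudgets helper and its types are hypothetical; the thresholds simply mirror the list above.

```typescript
// Minimal subset of a Lighthouse JSON report that this sketch relies on.
interface LighthouseAudit {
  numericValue?: number;
}

interface LighthouseReport {
  audits: Record<string, LighthouseAudit>;
}

// Targets from the list above. Timing metrics are in milliseconds; CLS is unitless.
const TARGETS: Record<string, number> = {
  'first-contentful-paint': 1800,
  'largest-contentful-paint': 2500,
  'total-blocking-time': 150,
  'cumulative-layout-shift': 0.1,
  'speed-index': 1300,
};

interface BudgetResult {
  auditId: string;
  value: number | undefined;
  target: number;
  passed: boolean;
}

// Compares each metric with its target. A missing metric counts as a failure.
function checkBudgets(report: LighthouseReport): BudgetResult[] {
  return Object.entries(TARGETS).map(([auditId, target]) => {
    const value = report.audits[auditId]?.numericValue;
    return {
      auditId,
      value,
      target,
      passed: value !== undefined && value < target,
    };
  });
}
```

Treating a missing metric as a failure keeps a broken or partial report from silently passing the check.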

This is a Lighthouse score for a demo app running as a CSR application:

This is how the score improves when the same application runs with SSR. The difference is noticeable even in a very simple application, and it typically grows as the application becomes more complex.

How SSR boosts your Lighthouse score:

- Faster First Contentful Paint (FCP): The server sends fully rendered HTML, so there’s no need to wait for JavaScript to download, parse, and execute before displaying content. As a result, visible content appears almost immediately.

- Improved Largest Contentful Paint (LCP): Critical and large content is pre-rendered on the server, allowing it to appear much faster than in a client-rendered approach.

- Reduced Total Blocking Time (TBT): Hydration reuses the server-rendered DOM instead of building it from scratch, so it does less work than full client-side rendering. The initial HTML is parsed without waiting for JavaScript, and script execution is deferred (with incremental hydration, it can also be split into smaller, on-demand pieces). This keeps the main thread less busy and helps the app become usable more quickly.

- Better Cumulative Layout Shift (CLS): The complete HTML structure, including images and content blocks, is sent with correct dimensions. This prevents layout shifts typically caused by late component mounting or delayed content loading.

- Faster Speed Index (SI): Content appears immediately and is progressively enhanced rather than rendering in large, delayed chunks. This leads to faster visual completion and a smoother experience for users.

Search Engine Optimization (SEO)

SEO is the practice of improving a website’s visibility and its position in search results by making it more attractive and accessible to search engines. As search engines serve as a gateway to information, if your website doesn’t appear at the top of results, you are missing out on the majority of potential users who might be interested in your content, products, or services.

Search engine bots struggle with JS-heavy, client-rendered pages where all the content is loaded dynamically after the initial HTML is served. Even if your application is well optimized and appears instantly to a human visitor, a bot reading the initial response sees only the root element and a couple of script references. While Google has improved over the years at indexing JavaScript-based content, rendering JavaScript is an extra, resource-intensive step that can delay indexing. Other major search engines are even more limited in this regard. That’s why it’s important to serve rich, content-filled HTML in the initial response.

Google uses Core Web Vitals (LCP, INP, and CLS) as a ranking signal, so better scores for these metrics can improve search visibility. Google also relies on mobile-first indexing, meaning it primarily evaluates the mobile version of your pages. Fast loading and a stable layout on mobile devices therefore matter even more, which makes the benefits of SSR especially significant.

The speed and efficiency of indexing also matter. SSR removes the JavaScript rendering bottleneck by providing discoverable content in the initial response. This can lead to faster indexing, fresher content in search results, and improved visibility, especially for rapidly changing content.

SSR ensures the complete anatomy of the website is visible to search engines. Internal links, navigation hierarchy, and breadcrumbs are all present, helping search engines understand the site’s structure and the relationships between its pages.

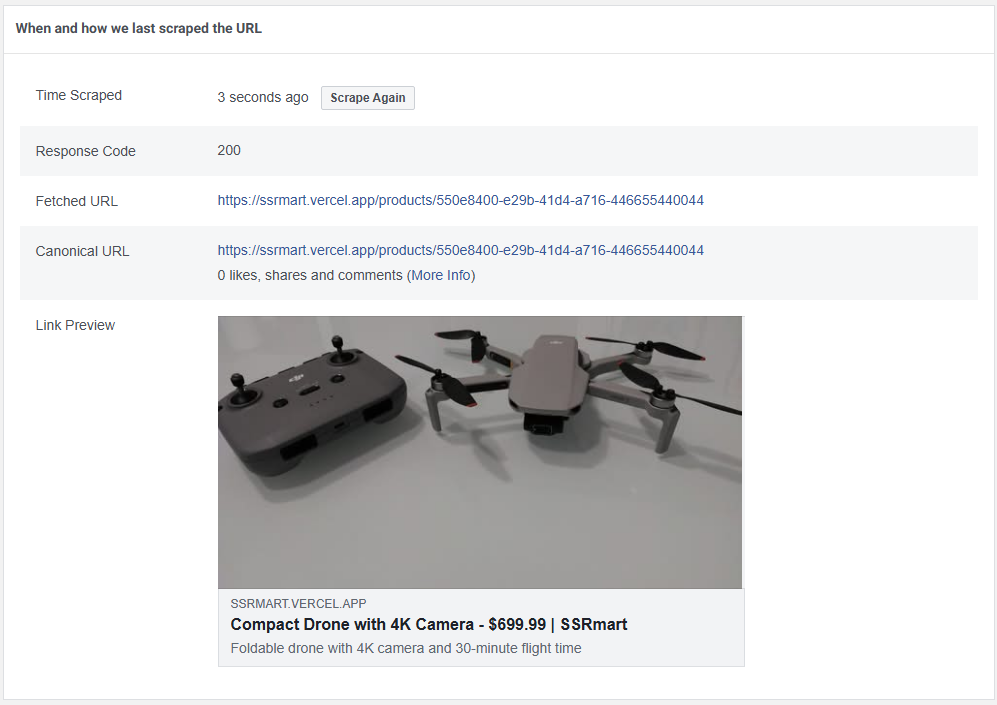

Metadata and Social Media Optimization

Metadata provides essential information about a website’s content, such as its title, description, image, or keywords, even before the page is fully loaded. While CSR applications typically ship with generic metadata that doesn’t reflect the dynamically generated content, an SSR application can include accurate metadata based on the page’s actual content in the initial response.

Browsers use metadata to enhance the user experience. For example, the page title appears in browser tabs, bookmarks, and history entries, while the meta description influences how the page appears in search engine results.

Social media platforms also rely on metadata. Their crawlers fetch the shared URL, parse the HTML response, and search for specific metadata in the <head> element to generate rich link previews. However, these crawlers generally cannot execute JavaScript, so metadata must be present in the initial server response. High-quality and accurate previews improve engagement rates, as users are more likely to click on links that offer a clear and attractive summary of what they’ll find.

Open Graph tags, originally developed by Facebook but now widely adopted across platforms like LinkedIn, WhatsApp, and Slack, use properties like og:title, og:description, og:image, and og:url to define how content should be displayed in social feeds.

Twitter Cards work similarly but use Twitter-specific tags like twitter:card, twitter:title, and twitter:image to optimize content specifically for Twitter’s platform, offering different card types, such as summary cards, large image cards, and app cards.

Beyond social media, these tags are increasingly used by messaging apps, email clients, and other platforms that generate link previews, making them essential for content marketing and brand consistency across the web’s social ecosystem.

A canonical URL, declared with a <link rel="canonical"> element in the page head, tells search engines which version of a webpage should be considered the “official” or primary one when multiple URLs contain identical or very similar content. It helps solve duplicate content issues that can harm search engine rankings, such as when the same page is accessible through different URLs. By consolidating SEO value to a single preferred URL, canonical tags help maintain search ranking authority, prevent keyword cannibalization, and ensure that search engines index and display the intended version of each page.
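In practice, the canonical URL is often derived by normalizing the requested URL. The helper below is a hypothetical sketch: which query parameters actually change the page content (here, an assumed page parameter) and how trailing slashes are treated are site-specific decisions, so adjust these rules to your own routes.

```typescript
// Hypothetical helper: derives a canonical URL from a requested URL.
// Assumption: only parameters listed in keepParams change the page content;
// everything else (tracking parameters, fragments) is dropped.
function toCanonicalUrl(rawUrl: string, keepParams: string[] = []): string {
  // The URL parser also lowercases the host name.
  const url = new URL(rawUrl);

  // Fragments never identify a separate page.
  url.hash = '';

  // Keep only allowed parameters, sorted so their order doesn't matter.
  const kept: Array<[string, string]> = [];
  url.searchParams.forEach((value, key) => {
    if (keepParams.includes(key)) kept.push([key, value]);
  });
  kept.sort((a, b) => a[0].localeCompare(b[0]));

  url.search = '';
  for (const [key, value] of kept) {
    url.searchParams.append(key, value);
  }

  // Treat "/products/" and "/products" as the same page (root stays "/").
  if (url.pathname.length > 1 && url.pathname.endsWith('/')) {
    url.pathname = url.pathname.replace(/\/+$/, '');
  }

  return url.toString();
}
```

For example, toCanonicalUrl('https://Shop.Example.com/products/?utm_source=news&page=2#reviews', ['page']) yields https://shop.example.com/products?page=2.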

To handle all of this, I prepared a simple SEO service that updates the page metadata:

```typescript
import { Injectable, inject } from '@angular/core';
import { DOCUMENT } from '@angular/common';
import { Meta, Title } from '@angular/platform-browser';

// Assumed shape of SeoData, inferred from how it is used in this article.
export interface SeoData {
  title?: string;
  description?: string;
  keywords?: string[];
  imageUrl?: string;
  url?: string;
  type?: string;
  noIndex?: boolean;
}

@Injectable({ providedIn: 'root' })
export class SeoService {
  private readonly _titleService = inject(Title);
  private readonly _metaService = inject(Meta);
  private readonly _document = inject(DOCUMENT);

  setSeoData(seoData: SeoData): void {
    const title = seoData.title ? `${seoData.title} | SSRmart` : 'SSRmart';
    const imageUrl = this._getImageParamsUrl(seoData.imageUrl);

    this._titleService.setTitle(title);

    // Open Graph tags
    this._updateMetaTag('og:title', title);
    this._updateMetaTag('og:description', seoData.description);
    this._updateMetaTag('og:image', imageUrl);
    this._updateMetaTag('og:url', seoData.url);
    this._updateMetaTag('og:type', seoData.type);

    // Twitter Card tags
    this._updateMetaTag('twitter:card', 'summary_large_image');
    this._updateMetaTag('twitter:title', title);
    this._updateMetaTag('twitter:description', seoData.description);
    this._updateMetaTag('twitter:image', imageUrl);

    this._updateMetaTag(
      'robots',
      seoData.noIndex ? 'noindex,nofollow' : 'index,follow'
    );

    this._updateCanonicalUrl(seoData.url);
  }

  private _updateMetaTag(name: string, content?: string): void {
    // Open Graph uses the "property" attribute; Twitter Card and robots tags
    // use "name" (search engines read robots directives from <meta name="robots">).
    const attribute = name.startsWith('og:') ? 'property' : 'name';
    const selector = `${attribute}="${name}"`;

    if (!content) {
      this._metaService.removeTag(selector);
      return;
    }

    const tag =
      attribute === 'property' ? { property: name, content } : { name, content };

    if (this._metaService.getTag(selector)) {
      this._metaService.updateTag(tag, selector);
    } else {
      this._metaService.addTag(tag);
    }
  }

  private _updateCanonicalUrl(url?: string): void {
    const existingCanonicalUrl = this._document.querySelector(
      'link[rel="canonical"]'
    );
    if (existingCanonicalUrl) existingCanonicalUrl.remove();

    if (url) {
      const canonicalLink = this._document.createElement('link');
      canonicalLink.rel = 'canonical';
      canonicalLink.href = url;
      this._document.head.appendChild(canonicalLink);
    }
  }

  private _getImageParamsUrl(imageUrl?: string): string | undefined {
    if (!imageUrl) return undefined;

    /*
      Open Graph image requirements:
      - size: 1200x630
      - format: jpg or png
    */
    // w, h, fm and fit are Unsplash (imgix) image URL parameters.
    const url = new URL(imageUrl);
    url.search = '';
    url.searchParams.set('w', '1200');
    url.searchParams.set('h', '630');
    url.searchParams.set('fm', 'jpg');
    url.searchParams.set('fit', 'crop');

    return url.toString();
  }
}
```

The service is fed with SeoData, which you can provide through route resolvers. The mapping can be as simple as copying fetched properties (for example, on a product details page), or much more granular and built from as many parameters as needed, as on the product search results page:

```typescript
import { inject } from '@angular/core';
import { ResolveFn } from '@angular/router';
// SeoData, ConfigService, categoryTypeGuard and capitalize come from the demo app.

const getTitle = (
  category: string,
  searchTerm: string,
  isBestSeller: boolean
): string => {
  if (categoryTypeGuard(category)) {
    return searchTerm
      ? `Search Results for "${searchTerm}" in ${capitalize(category)}`
      : `${capitalize(category)} Products`;
  }

  if (searchTerm) return `Search Results for "${searchTerm}"`;
  if (isBestSeller) return 'Best Selling Products';

  return 'Products';
};

const getDescription = (
  category: string,
  searchTerm: string,
  isBestSeller: boolean
): string => {
  if (searchTerm) return `Find the best products matching "${searchTerm}".`;
  if (categoryTypeGuard(category))
    return `Discover amazing ${category} products at great prices.`;
  if (isBestSeller) return 'Shop our most popular and best-selling products.';

  return 'Browse our wide selection of products.';
};

export const productSearchSeoResolver: ResolveFn<SeoData> = (route) => {
  const category = route.params['category'];
  const searchTerm = route.queryParams['term'];
  const isBestSeller = route.queryParams['bestsellers'];
  const baseUrl = inject(ConfigService).get('baseUrl');

  // The canonical URL ignores query params, so all search variants
  // of a category point to the same canonical page.
  const url = category
    ? `${baseUrl}/products/${category}`
    : `${baseUrl}/products`;

  const imageUrl =
    'https://images.unsplash.com/photo-1498049794561-7780e7231661';

  return {
    title: getTitle(category, searchTerm, Boolean(isBestSeller)),
    description: getDescription(category, searchTerm, Boolean(isBestSeller)),
    keywords: ['products', 'shop', 'online store', category, searchTerm].filter(
      Boolean
    ),
    type: 'website',
    url,
    imageUrl,
  };
};
```

To verify how metadata-powered previews work, you can use tools like the Facebook Sharing Debugger. It shows the preview that would appear when a link is shared, lists the detected metadata, and warns about any missing information. Below is the result for one of the product pages:

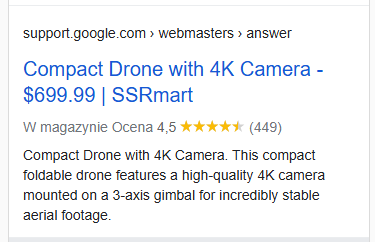

Structured Data with JSON-LD

Structured data is another way of adding semantic markup to web pages. It helps search engines understand and categorize content more effectively, often leading to enhanced search results with rich snippets. JSON-LD (JavaScript Object Notation for Linked Data) lives in a separate script block in the page head, so it can be implemented without cluttering the page structure. Following Schema.org standards, it can describe various content types using standardized properties like @type, name, description, image, rating, and price (to use the e-commerce app as an example). JSON-LD has become the preferred format for structured data thanks to its flexibility, ease of maintenance, and Google’s explicit recommendation.

This utility function generates a product’s structured data from product data fetched through a resolver:

```typescript
export const generateProductStructuredData = (
  product: Product,
  baseUrl: string
): StructuredData => {
  return {
    '@context': 'https://schema.org/',
    '@type': 'Product',
    '@id': `${baseUrl}/products/${product.id}`,
    name: product.name,
    description: product.shortDescription,
    image: product.imageUrl,
    sku: product.id,
    category: product.category,
    keywords: product.keywords.join(', '),
    aggregateRating: {
      '@type': 'AggregateRating',
      ratingValue: product.rating,
      ratingCount: product.ratingCount,
      bestRating: '5',
      worstRating: '1',
    },
    offers: {
      '@type': 'Offer',
      url: `${baseUrl}/products/${product.id}`,
      priceCurrency: 'USD',
      price: product.price,
      itemCondition: 'https://schema.org/NewCondition',
      availability: 'https://schema.org/InStock',
      seller: {
        '@type': 'Organization',
        name: 'SSRMart',
        url: baseUrl,
      },
    },
    brand: {
      '@type': 'Brand',
      name: 'SSRMart',
    },
    additionalProperty: [
      {
        '@type': 'PropertyValue',
        name: 'Best Seller',
        value: product.isBestSeller,
      },
    ],
  };
};
```

The resulting data is then passed to the StructuredDataService, which is responsible for creating a script element in the document’s head:

```typescript
import { Injectable, inject } from '@angular/core';
import { DOCUMENT } from '@angular/common';
// StructuredData and StructuredDataId are types from the demo app.

@Injectable({ providedIn: 'root' })
export class StructuredDataService {
  private readonly _document = inject(DOCUMENT);

  addStructuredData(data: StructuredData, id: StructuredDataId): void {
    const script = this._document.createElement('script');
    script.type = 'application/ld+json';
    script.textContent = JSON.stringify(data);
    script.id = this._transformId(id);

    // Replace any previous block with the same id (e.g. after navigation).
    this.removeStructuredData(id);
    this._document.head.appendChild(script);
  }

  removeStructuredData(id: string): void {
    const script = this._document.getElementById(this._transformId(id));
    if (script) {
      script.remove();
    }
  }

  private _transformId(id: string): string {
    return `${id}-structured-data`;
  }
}
```
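One detail worth guarding against: JSON.stringify does not escape <, so a product description containing </script> could close the script element early once the page is serialized to HTML on the server. A common mitigation is to replace <, > and & with \u003c-style Unicode escapes, which JSON parsers decode back to the original characters. The toSafeJsonLd helper below is a hypothetical sketch of that approach; it could replace the JSON.stringify(data) call in addStructuredData.

```typescript
// Hypothetical helper: serializes structured data for an inline
// <script type="application/ld+json"> element.
// JSON.stringify leaves "<", ">" and "&" untouched; replacing them with
// \u003c, \u003e and \u0026 keeps the JSON equivalent while preventing
// a value such as "</script>" from closing the element early.
function toSafeJsonLd(data: object): string {
  return JSON.stringify(data)
    .replace(/</g, '\\u003c')
    .replace(/>/g, '\\u003e')
    .replace(/&/g, '\\u0026');
}
```

Because the escapes are valid JSON, search engines read exactly the same data as with plain JSON.stringify.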

To verify the results, I use the Rich Results Test tool. Google relies on structured data to understand the content of a page and display it with a richer appearance in search results. To make your site eligible to appear as one of these rich results, follow this guide.

Page indexing

Page indexing is the process by which search engines discover, analyze, and store web pages in their databases so they can be retrieved and displayed in search results when users make relevant queries.

Sitemap

A sitemap gives search engines a roadmap of a website’s content so its pages can be discovered, crawled, and indexed more effectively. It’s usually an XML document that defines, for each URL, metadata like:

- loc – the canonical URL of the page

- lastmod – the last modification date

- changefreq – how frequently the content changes (for example, daily, weekly, or monthly)

- priority – the page’s relative importance within the website

```typescript
import { Router } from 'express';
// getServerConfig and getSitemapItems are helpers from the demo app.

export const sitemapRoute = (router: Router): void => {
  router.get('/sitemap.xml', async (_req, res) => {
    const { baseUrl } = getServerConfig();
    const sitemapItems = await getSitemapItems(baseUrl);

    const xml = `<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
${sitemapItems
  .map(
    (item) => `
  <url>
    <loc>${item.loc}</loc>
    <lastmod>${item.lastmod}</lastmod>
    <changefreq>${item.changefreq}</changefreq>
    <priority>${item.priority}</priority>
  </url>`
  )
  .join('')}
</urlset>`;

    res.set('Content-Type', 'application/xml');
    // Cache for one day (86,400 s) in browsers and shared caches/CDNs.
    res.set('Cache-Control', 'public, max-age=86400, s-maxage=86400');
    res.send(xml);
  });
};
```

A sitemap speeds up indexing, so newly published or modified content can be discovered and processed more quickly by search engines.

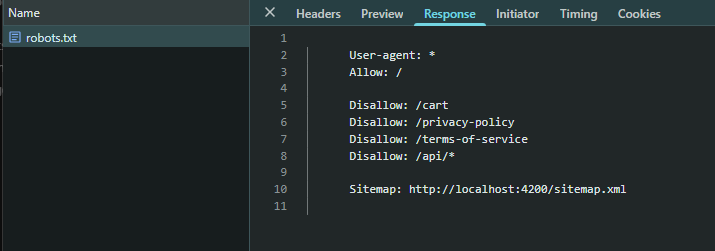

For optimal SEO impact, sitemaps should only include canonical URLs and pages you want indexed, excluding duplicate content or pages blocked by robots.txt.
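The sitemap route above interpolates values straight into the XML, so a URL containing & (for example, one with several query parameters) would produce an invalid sitemap. Below is a sketch of the same template as a pure function that escapes the five XML special characters, as the sitemap protocol requires. The SitemapItem shape mirrors the fields used in the route and is an assumption about what getSitemapItems returns.

```typescript
// Assumed shape of the items returned by getSitemapItems.
interface SitemapItem {
  loc: string;
  lastmod: string; // W3C Datetime, e.g. "2024-05-01"
  changefreq: 'always' | 'hourly' | 'daily' | 'weekly' | 'monthly' | 'yearly' | 'never';
  priority: number; // 0.0 to 1.0
}

// Escapes the characters that are special in XML text content.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, '&amp;') // must run first so other entities aren't double-escaped
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&apos;');
}

function buildSitemapXml(items: SitemapItem[]): string {
  const urls = items
    .map(
      (item) => `
  <url>
    <loc>${escapeXml(item.loc)}</loc>
    <lastmod>${escapeXml(item.lastmod)}</lastmod>
    <changefreq>${item.changefreq}</changefreq>
    <priority>${item.priority}</priority>
  </url>`
    )
    .join('');

  // The XML declaration must be the very first thing in the document.
  return `<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">${urls}
</urlset>`;
}
```

Keeping the XML generation in a pure function also makes it easy to unit test separately from the Express route.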

Robots.txt

Robots.txt is a text file placed in the website’s root directory that tells search crawlers which parts of the application they should or shouldn’t access. It uses simple directives like:

- User-agent – defines which crawler the rules apply to

- Disallow – blocks access to specific pages or directories

- Allow – permits access to content

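It helps you manage the crawl budget by preventing search engines from spending it on unimportant pages, duplicate content, or staging environments, and gives you control over which areas crawlers visit. Keep in mind that robots.txt is publicly readable and only advisory, so it is not a security mechanism for truly sensitive content.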
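Since the sitemap is already served from the Express server, robots.txt can be generated in a similar way. The pure function below is a sketch of that idea: it turns a list of rule groups into the file’s text and appends a Sitemap line, a standard robots.txt directive that points crawlers to your sitemap. The RobotsGroup shape and the /cart and /checkout paths in the usage example are hypothetical.

```typescript
// One group of rules for a single crawler ("*" matches all crawlers).
interface RobotsGroup {
  userAgent: string;
  allow?: string[];
  disallow?: string[];
}

// Builds robots.txt content: each group starts with a User-agent line,
// followed by its Allow/Disallow rules; groups are separated by a blank line.
function buildRobotsTxt(groups: RobotsGroup[], sitemapUrl?: string): string {
  const blocks = groups.map((group) =>
    [
      `User-agent: ${group.userAgent}`,
      ...(group.allow ?? []).map((path) => `Allow: ${path}`),
      ...(group.disallow ?? []).map((path) => `Disallow: ${path}`),
    ].join('\n')
  );

  if (sitemapUrl) {
    blocks.push(`Sitemap: ${sitemapUrl}`);
  }

  return blocks.join('\n\n') + '\n';
}

// Usage: keep all crawlers out of the (hypothetical) cart and checkout pages.
const robotsTxt = buildRobotsTxt(
  [{ userAgent: '*', allow: ['/'], disallow: ['/cart', '/checkout'] }],
  'https://shop.example.com/sitemap.xml'
);
```

The resulting text can be sent with a text/plain content type from a /robots.txt route, just like the sitemap.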

Meta robots

You can explicitly tell search engines not to include a specific page in their search results, even if they can crawl and access its content, by adding a noindex robots meta tag. With noindex alone (or noindex,follow), search engines can still follow links from that page to discover other content, but the page itself won’t appear in search results. This helps improve site quality by preventing low-value pages from competing with your important content in search results.

Rendering modes

As mentioned above, the app.routes.server.ts file contains the configuration for server routes. Its main purpose is to define the render mode for each route in the application. Angular offers three rendering modes:

- Server (SSR) – server renders a page for each request and sends fully populated HTML back to the browser

- Prerender (SSG) – route is prerendered at build time so static HTML file can be served

- Client (CSR) – the page is rendered in the browser (the default Angular behavior)

```typescript
import { RenderMode, ServerRoute } from '@angular/ssr';

export const serverRoutes: ServerRoute[] = [
  {
    path: '',
    renderMode: RenderMode.Server,
  },
  {
    path: 'products',
    renderMode: RenderMode.Server,
  },
  {
    path: 'privacy-policy',
    renderMode: RenderMode.Prerender,
  },
  // ...
  {
    // Catch-all for any route not matched above.
    path: '**',
    renderMode: RenderMode.Server,
  },
];
```

Each rendering mode has its own pros and cons and should be chosen based on the specific needs of your application. Let’s explore each of them.

Server-side rendering

As mentioned earlier in this article, with Server-Side Rendering (SSR), the server sends a fully rendered HTML document directly to the browser. This means the browser doesn’t have to wait for JavaScript to download and execute before showing the content. Instead, the user immediately receives a ready-to-display page, along with all the necessary data. This approach not only improves the perceived performance for users but is also great for SEO, since search engines can easily crawl and index the fully rendered HTML content without depending on JavaScript execution.

If you decide to use SSR, keep in mind that your code can’t depend on browser-specific APIs. Using global objects such as window, document, navigator, or location, as well as some properties of HTMLElement, will cause runtime errors on the server. Instead, use the SSR-friendly abstractions provided by Angular. For example, inject the DOCUMENT token in a service like SeoService instead of accessing document directly. This ensures your code runs safely both on the server and in the browser.

@Injectable({ providedIn: 'root' })

export class SeoService {

private readonly _titleService = inject(Title);

private readonly _metaService = inject(Meta);

private readonly _document = inject(DOCUMENT);

…

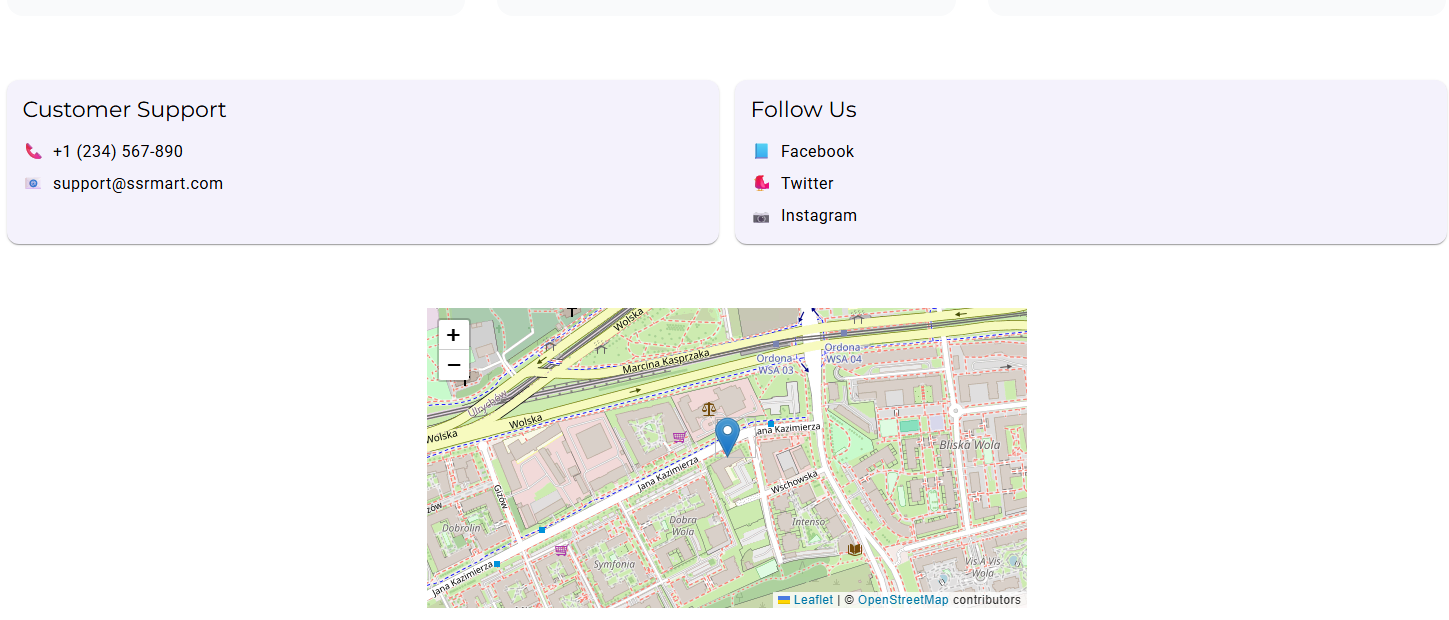

}Another limitation to keep in mind comes when choosing third-party libraries. Always make sure they are SSR-compatible and don’t rely on the browser under the hood. In the demo application, the “About” page displays a map with the shop localization using LeafletJS, which is a great example of a library using DOM manipulation.

If you need access to browser-only APIs, make sure that code runs only in the browser. You can do this by wrapping it inside the afterNextRender or afterEveryRender lifecycle hooks, which run only in the browser and are skipped on the server.

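```typescript
import { Component, afterNextRender, inject } from '@angular/core';
// SeoService and getAboutPageSeo come from the demo app.

// Selector and template are illustrative; Leaflet needs an element with id="map".
@Component({
  selector: 'app-about-page',
  template: `<div id="map" style="height: 400px"></div>`,
})
export class AboutPageComponent {
  private readonly _seoService = inject(SeoService);

  constructor() {
    // Runs on both server and client, so metadata is part of the SSR response.
    this._seoService.setSeoData(getAboutPageSeo());

    // Runs only in the browser, after the next render.
    afterNextRender(async () => {
      await this._initializeMap();
    });
  }

  private async _initializeMap(): Promise<void> {
    const lat = 52.225996;
    const lng = 20.949808;
    const zoom = 16;

    try {
      // Leaflet is imported lazily, so its DOM-dependent code never runs on the server.
      const L = await import('leaflet');

      const map = L.map('map').setView([lat, lng], zoom);
      L.marker([lat, lng]).addTo(map);
      L.tileLayer('https://{s}.tile.openstreetmap.org/{z}/{x}/{y}.png', {
        attribution:
          '© <a href="https://www.openstreetmap.org/copyright">OpenStreetMap</a> contributors',
      }).addTo(map);
    } catch (error) {
      console.error('Failed to initialize map:', error);
    }
  }
}
```

If you need to perform this kind of check outside a component or directive, where these lifecycle hooks aren’t available, you can inject the PLATFORM_ID token. With it, you can use the isPlatformBrowser utility to run code only in the browser (or isPlatformServer if you want code to execute strictly on the server).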

```typescript
import { PLATFORM_ID, inject } from '@angular/core';
import { isPlatformBrowser } from '@angular/common';

// Must be called from an injection context (e.g. a constructor or field
// initializer): inject() runs synchronously, before the first await.
export const initializeMap = async (containerId: string): Promise<void> => {
  const platformId = inject(PLATFORM_ID);

  // Skip on the server: Leaflet needs a real DOM.
  if (!isPlatformBrowser(platformId)) return;

  const lat = 52.225996;
  const lng = 20.949808;
  const zoom = 16;

  try {
    const L = await import('leaflet');

    const map = L.map(containerId).setView([lat, lng], zoom);
    L.marker([lat, lng]).addTo(map);
  } catch (error) {
    console.error('Failed to initialize map:', error);
  }
};
```

I decided to use this rendering mode for the home and product search pages. Since these pages display product search results, which can change frequently, it made sense to render them on the server. This way, I get all the SEO benefits needed to keep search rankings strong, while also ensuring customers always see the most up-to-date content: a win-win for both the user experience and business outcomes.

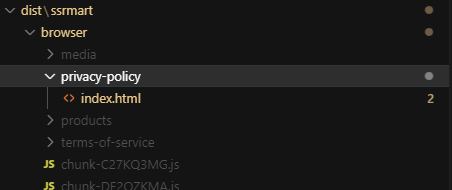

Prerendering (SSG)

In prerendering mode, the HTML document is generated at build time. This makes pages load even faster, since the server can respond directly with a static file, without doing any extra work.

Another big advantage is caching. Static files can be efficiently cached by Content Delivery Networks (CDNs), browsers, and other caching layers, which leads to even faster subsequent page loads. In fact, a fully static site can be deployed entirely through a CDN or a simple static file server, removing the need to maintain a custom server runtime for your application.

To see the result of this process, build your application and then open the dist folder:

Just like SSR, prerendering provides a significant boost for SEO, since search engines receive fully rendered HTML. The same limitation applies here: you need to avoid using browser-specific APIs directly.

One additional constraint is that all the data required for rendering must be available at build time. This means prerendered pages can’t depend on user-specific data or anything that changes per request. Because of this, prerendering is best suited for pages that are identical for all users.

Because prerendering happens at build time, it can noticeably increase the duration of your production builds. Generating a large number of HTML documents not only extends build times but can also significantly increase the total size of your deployment package. As a result, deployments may become slower and require more storage or bandwidth.

Customizing prerendering

To prerender documents for parameterized routes, you can define an asynchronous getPrerenderParams function. It returns a promise that resolves to an array of objects, where each object maps route parameter names to their values.

Inside this function, you can use Angular’s inject function to access dependencies and perform any required work to determine which routes should be prerendered. A common approach is to make API calls to fetch the data needed to construct the array of parameter values.

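```typescript
import { inject } from '@angular/core';
import { RenderMode, ServerRoute } from '@angular/ssr';
import { firstValueFrom } from 'rxjs';
// ProductService comes from the demo app.

export const serverRoutes: ServerRoute[] = [
  // ...
  {
    path: 'products/:id',
    renderMode: RenderMode.Prerender,
    getPrerenderParams: async () => {
      // inject() works here because Angular runs this function
      // in an injection context at build time.
      const productService = inject(ProductService);
      const products = await firstValueFrom(productService.searchProducts(), {
        defaultValue: [],
      });

      // One { id } object per product, i.e. one prerendered page per product.
      return products.map((product) => ({ id: String(product.id) }));
    },
  },
];
```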

By using the fallback property, you can define a strategy for handling requests to routes that were not prerendered. The available options are:

- Server – falls back to server-side rendering (default)

- Client – falls back to client-side rendering

- None – a request won’t be handled
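For example, if only some product pages are prerendered, the remaining ones could be rendered in the browser by adding a fallback to the route entry. This is a minimal configuration sketch: PrerenderFallback is the enum exported by @angular/ssr, while the static list of IDs is a placeholder for the ProductService call shown above.

```typescript
import { PrerenderFallback, RenderMode, ServerRoute } from '@angular/ssr';

export const serverRoutes: ServerRoute[] = [
  {
    path: 'products/:id',
    renderMode: RenderMode.Prerender,
    // Hypothetical static list; the demo app fetches product IDs instead.
    getPrerenderParams: async () => [{ id: '1' }, { id: '2' }],
    // Requests for other product IDs are rendered in the browser (CSR)
    // instead of falling back to server-side rendering.
    fallback: PrerenderFallback.Client,
  },
];
```

Choosing Client here trades SEO for lower server load on pages that weren’t worth prerendering; Server keeps full SEO coverage at the cost of on-demand rendering.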

Client-side rendering

This mode takes us back to the default Angular behavior. It’s the simplest approach, since you can write code as if it will always run in a web browser and freely use a wide range of client-side libraries.

The trade-off, however, is that you lose all the benefits of SSR, which hurts performance and SEO.

On the other hand, the server doesn’t need to do any extra work beyond serving the static JavaScript assets. This can be an advantage if server costs are a concern, especially for pages where SSR provides little to no value, such as an admin panel.

Hydration

According to Angular’s documentation:

Hydration is the process that restores the server-side rendered application on the client. This includes things like reusing the server rendered DOM structures, persisting the application state, transferring application data that was retrieved already by the server, and other processes.

The term “hydration” is quite fitting. A helpful analogy is the resurrection plant. In its dried state under the desert sun, the plant resembles the static HTML delivered from the server: structurally complete in form and appearance, yet lifeless and unresponsive.

Adding water brings it back to life. In the same way, hydration in Angular brings interactivity to a page. The static but fully formed HTML is transformed into a dynamic, responsive application, while reusing all the work already done during server-side rendering.

Matching existing DOM elements at runtime and reusing them whenever possible eliminates the unnecessary work of destroying and recreating nodes. This improves performance, for example by reducing First Input Delay (FID) and Largest Contentful Paint (LCP).

Additionally, it prevents UI flickering and layout shifts, which improves the Cumulative Layout Shift (CLS) score. Higher scores across these metrics not only enhance user experience but also have a positive impact on SEO.

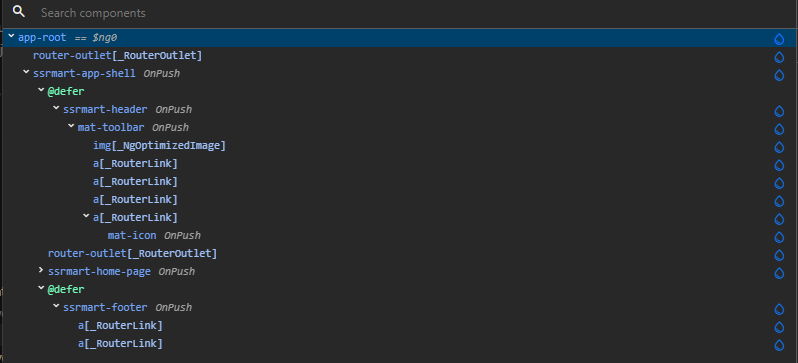

To verify that hydration is enabled and working properly, open the browser’s Developer Tools. You should see a confirmation log in the console with hydration-related stats like:

Angular hydrated 5 component(s) and 65 node(s), 0 component(s) were skipped. 5 defer block(s) were configured to use incremental hydration.

You can also use the Angular DevTools browser extension. Look for droplet icons in the component tree or enable the hydration overlay to confirm which parts of the page were hydrated.

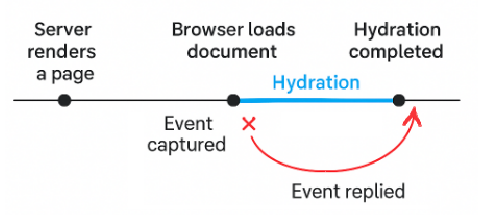

Replaying events

When a page is rendered on the server, it becomes visible to the user as soon as the browser loads the HTML document. At this stage, the page may look ready, but the application is not yet interactive. Hydration still needs to complete before Angular can fully attach its behavior to the DOM.

This can create a problem: what if a user tries to click a button, type in a field, or interact with the page before hydration is finished? Without a solution, those early interactions would simply be lost. This is where Event Replay comes in.

The Event Replay feature ensures that user interactions are preserved during the non-interactive phase. It works in three steps:

- Capture – any native browser events (such as clicks, key presses, or scrolls) triggered before hydration completes are intercepted

- Store – these captured events are temporarily stored in memory while Angular is still hydrating the application

- Replay – once hydration is finished and the application is fully interactive, Angular replays the stored events as if they had just happened
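To make the three steps concrete, here is a simplified, framework-agnostic model in TypeScript. It is not Angular's implementation, which is built into the framework and differs in detail; EventReplayBuffer, dispatch, and completeHydration are hypothetical names used only to illustrate capture, store, and replay.

```typescript
// Simplified model of Event Replay; not Angular's implementation.
type CapturedEvent = { type: string; target: string };
type ReplayHandler = (event: CapturedEvent) => void;

class EventReplayBuffer {
  private readonly queue: CapturedEvent[] = [];
  private handler: ReplayHandler | null = null;

  // Capture: every native event passes through here.
  dispatch(event: CapturedEvent): void {
    if (this.handler) {
      this.handler(event); // already hydrated: handle immediately
    } else {
      this.queue.push(event); // Store: keep it in memory until hydration ends
    }
  }

  // Replay: once hydrated, deliver stored events in their original order.
  completeHydration(handler: ReplayHandler): void {
    this.handler = handler;
    for (const event of this.queue.splice(0)) {
      handler(event);
    }
  }
}

// Usage: two interactions happen before hydration, one after.
const buffer = new EventReplayBuffer();
const handled: string[] = [];
buffer.dispatch({ type: 'click', target: 'add-to-cart' });
buffer.dispatch({ type: 'keydown', target: 'search' });
buffer.completeHydration((event) => handled.push(`${event.type}@${event.target}`));
buffer.dispatch({ type: 'click', target: 'checkout' });
// handled: ['click@add-to-cart', 'keydown@search', 'click@checkout']
```

The key design point is that nothing is lost or reordered: events that arrive before hydration wait in the queue and are delivered first, and later events go straight to the handler.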

This feature is enabled using the withEventReplay() function:

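import { bootstrapApplication, provideClientHydration, withEventReplay } from '@angular/platform-browser';
import { App } from './app/app'; // root component; path assumed

bootstrapApplication(App, {
  providers: [
    provideClientHydration(withEventReplay())
  ]
});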

If you use incremental hydration, event replay is enabled by default.

Incremental hydration

Incremental hydration is an advanced technique that allows parts of an application to remain dehydrated and then become hydrated incrementally on demand, rather than all at once. This approach provides granular control over the process, improves performance by reducing the initial bundle size, and still delivers a user experience comparable to full hydration.

It leverages the familiar @defer block syntax, which defines a boundary for incremental hydration along with a hydrate trigger. During server-side rendering, Angular loads the @defer block’s content and renders it in place of a placeholder. On the client side, however, these dependencies remain deferred, and the content stays dehydrated until the hydrate trigger fires.
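Incremental hydration is opt-in. Assuming Angular 19 or later, it is switched on with the withIncrementalHydration() feature of provideClientHydration(); the App import path below is an assumption about the project layout.

```typescript
import {
  bootstrapApplication,
  provideClientHydration,
  withIncrementalHydration,
} from '@angular/platform-browser';
import { App } from './app/app'; // root component; path assumed

bootstrapApplication(App, {
  providers: [
    // Turns on support for @defer (hydrate ...) triggers.
    provideClientHydration(withIncrementalHydration()),
  ],
});
```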

Hydration only occurs during the initial page load, when the content is rendered on the server. For subsequent page loads that are client-side rendered (for example, when a user navigates to a page using routerLink), Angular falls back to the standard @defer block behavior.

Once hydration is complete, all browser events (especially those matching component listeners) that were triggered before hydration are replayed using Angular’s Event Replay mechanism.

It’s worth remembering that a deferrable view uses idle as the default trigger if no explicit one is specified. If you decide to use a different trigger for client-side deferring, you may also need to define a @placeholder block.

import { ChangeDetectionStrategy, Component } from '@angular/core';
import { RouterOutlet } from '@angular/router';
// HeaderComponent and FooterComponent are the demo app's own components (imports omitted).

@Component({
  selector: 'ssrmart-app-shell',
  template: `
    @defer (hydrate on hover) {
      <ssrmart-header />
    }

    <main class="flex-1">
      <router-outlet />
    </main>

    @defer (hydrate on interaction; on immediate) {
      <ssrmart-footer />
    } @placeholder {
      <footer class="footer-placeholder"></footer>
    }
  `,
  changeDetection: ChangeDetectionStrategy.OnPush,
  imports: [HeaderComponent, FooterComponent, RouterOutlet],
  host: {
    class: 'flex flex-col min-h-screen',
  },
})
export default class AppShellComponent {}

For the purpose of this demo, I defined the hydration trigger as hover (though in practice, I would normally use interaction, like I did for the footer). As you can see, once I hover over the header, Angular hydrates it and lazy-loads the chunks for the HeaderComponent, MatToolbar, and MatIcon while the footer remains dehydrated.

The @defer block can define multiple hydration triggers, each written with the hydrate keyword and separated by semicolons. Hydration happens as soon as any of them fires; at that point, Angular loads the deferrable view’s dependencies and hydrates the content. The available triggers are as follows:

- on idle – once the browser reaches an idle state, as detected via requestIdleCallback

- on viewport – when content enters the viewport (detected using the Intersection Observer API)

- on interaction – when the user interacts with an element through a click or keydown event

- on hover – when the user hovers over the content with a mouse (mouseover) or focuses it (focusin)

- on immediate – as soon as all other non-deferred content has finished rendering

- on timer – after a specified delay

- when condition – when a given condition evaluates to truthy

A special case is the never trigger, which tells Angular to keep the block dehydrated indefinitely, leaving it as static content. This is particularly useful for non-interactive or decorative sections that do not benefit from JavaScript activation. Additionally, never prevents hydration for all of its descendants, ensuring that no nested hydration triggers are executed.
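The trigger syntax can be sketched with a hypothetical component; its selector, signal, and block contents are placeholders. Because these blocks define only hydrate triggers, client-side rendered navigations fall back to the default idle trigger.

```typescript
import { Component, signal } from '@angular/core';

@Component({
  selector: 'app-trigger-examples', // hypothetical component
  template: `
    <!-- Hydrates when either trigger fires: entering the viewport or after 5 seconds -->
    @defer (hydrate on viewport; hydrate on timer(5s)) {
      <section>Reviews</section>
    }

    <!-- Hydrates once the condition becomes truthy -->
    @defer (hydrate when recommendationsReady()) {
      <section>Recommendations</section>
    }

    <!-- Never hydrated: stays as static server-rendered HTML -->
    @defer (hydrate never) {
      <section>Legal notice</section>
    }
  `,
})
export class TriggerExamplesComponent {
  // Flipped to true by the component's own logic (not shown).
  readonly recommendationsReady = signal(false);
}
```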

Speaking of nested hydration triggers, Angular’s component system is hierarchical, which means that for a component to be hydrated, all of its parent components must be hydrated first. If hydration is triggered for a block nested inside a dehydrated structure, Angular starts with the top-most dehydrated parent and works downward, hydrating each block in turn until it reaches the one that was triggered.
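The top-down order can be modelled as walking from the triggered block up through its dehydrated ancestors and then reversing that chain. This is a conceptual sketch, not Angular's internal algorithm; DeferBlock and hydrationOrder are hypothetical names, and the sample data borrows the router-outlet wrapper and product card from the demo.

```typescript
// Conceptual model of nested incremental hydration; not Angular's internal code.
interface DeferBlock {
  id: string;
  parent: DeferBlock | null; // null means the block sits in already-hydrated content
  hydrated: boolean;
}

// Returns the block ids to hydrate, top-most dehydrated ancestor first.
function hydrationOrder(target: DeferBlock): string[] {
  const chain: DeferBlock[] = [];
  let block: DeferBlock | null = target;
  // Collect the target and every dehydrated ancestor above it.
  while (block !== null && !block.hydrated) {
    chain.push(block);
    block = block.parent;
  }
  // The chain was built bottom-up, so reverse it to hydrate top-down.
  return chain.reverse().map((b) => b.id);
}

// Usage: a product card nested inside a dehydrated router-outlet wrapper.
const page: DeferBlock = { id: 'page', parent: null, hydrated: false };
const card: DeferBlock = { id: 'product-card', parent: page, hydrated: false };
const order = hydrationOrder(card); // ['page', 'product-card']
```

Once the outer block is hydrated, the walk stops there, so a later trigger on another card only hydrates that card.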

To visualize this process, let’s wrap the router-outlet in the shell component in a defer block with the interaction hydration trigger. Then, for the product cards in the Bestsellers section on the home page, we use a @defer block with the hover trigger. The result looks like this:

Constraints

When Angular performs hydration, it takes the HTML that was rendered on the server and reuses it in the browser instead of rendering everything from scratch. For this process to succeed, the DOM structure on the server and the DOM in the browser must match exactly.

Avoid direct DOM manipulation

Hydration assumes Angular has full control over the DOM. If you manipulate the DOM directly, for example, by querying specific elements, manually appending or removing elements, or moving nodes around, Angular has no knowledge of these changes.

Because Angular is unaware of such modifications, it cannot resolve the differences when comparing the server-rendered DOM to the browser DOM during hydration.

Do not rely on environment-dependent rendering logic

The server environment differs fundamentally from the browser. Properties and APIs that exist in the browser – such as window, localStorage, device characteristics, user preferences, or any browser-specific state – are either unavailable or return different values on the server.

If your component renders different content based on these environment-specific variables, you create a mismatch between what the server renders and what the client expects during hydration.

Instead, maintain a consistent DOM structure and defer environment-specific behavior to CSS or post-hydration logic.
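One way to move environment-specific logic after hydration is afterNextRender() from @angular/core, whose callbacks run only in the browser, after rendering. A minimal sketch, assuming a hypothetical theme badge that keeps its value in localStorage: the server and the first client render both output the default value, and the stored preference is applied afterwards.

```typescript
import { afterNextRender, ChangeDetectionStrategy, Component, signal } from '@angular/core';

@Component({
  selector: 'app-theme-badge', // hypothetical component
  template: `<span class="badge">{{ theme() }}</span>`,
  changeDetection: ChangeDetectionStrategy.OnPush,
})
export class ThemeBadgeComponent {
  // Same initial value on the server and the client, so hydration sees matching DOM.
  readonly theme = signal('default');

  constructor() {
    // Runs only in the browser, after rendering, so localStorage is safe to use here.
    afterNextRender(() => {
      this.theme.set(localStorage.getItem('theme') ?? 'default');
    });
  }
}
```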

Ensure a valid HTML structure

Browsers are forgiving with invalid HTML. If your templates produce incorrect markup, the browser may silently “fix” it by closing unclosed tags, nesting elements differently, or discarding invalid nodes. While this correction might seem convenient, it creates a serious problem for hydration: the corrected browser DOM no longer matches the server-rendered DOM. This mismatch causes hydration to fail.

Make sure to write valid, well-formed HTML in Angular templates, and consider using validation tools or Angular’s strict template checks to catch errors early.

Whitespace preservation

Hydration checks more than elements. It also compares whitespace and comment nodes generated during server-side rendering. If whitespace is preserved on the server but handled differently in the browser, Angular detects a mismatch.

To avoid these problems, Angular recommends leaving the preserveWhitespaces option set to its default value of false. This ensures consistent output between the server and the client.

Custom or Noop Zone.js

Hydration relies on a signal from Zone.js indicating when the application has become stable. At this point, Angular can either begin the serialization process on the server or perform post-hydration cleanup on the client by removing any unclaimed DOM nodes. Using a custom or “noop” Zone.js implementation can affect the timing of the stable event. This configuration is not yet fully supported.

Skipping hydration

For the reasons mentioned above, hydration may not work with certain components. The recommended approach is to refactor those components to make them hydration-compatible. If refactoring proves too complex or time-consuming, there is a last-resort workaround: the ngSkipHydration attribute.

<hydration-incompatible-component ngSkipHydration />

or

@Component({
  …,
  host: { ngSkipHydration: 'true' },
})
export class HydrationIncompatibleComponent {}

When applied to a component’s host node, this attribute instructs Angular to skip hydration for that component and all of its descendants. As a result, they will be destroyed and re-rendered on the client.

Interactions with request and response

Requests and responses act as bridges between server-specific data and Angular’s components and services, forming the foundation of context-aware server-side rendering. To support this interaction, Angular provides dedicated dependency injection tokens.

Keep in mind that the injection tokens below are null in the following scenarios:

- during the build process

- when rendering the application on the client side

- when using static site generation (SSG)

- during route extraction in development

Request

The REQUEST token grants access to the current Request object from the Web API. This object exposes rich details about incoming requests, which can be useful for:

- Client profiling – device type, language, localization settings

- Security context – connection encryption, referrer domains, authentication headers

- Performance and SEO optimization – dynamic rendering strategies, caching adjustments

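import { DOCUMENT, isPlatformServer } from '@angular/common';
import { inject, PLATFORM_ID, REQUEST } from '@angular/core';

// Must be called in an injection context (e.g. a constructor or field initializer).
export const getCookie = (name: string): string => {
  const document = inject(DOCUMENT);
  const request = inject(REQUEST);
  const platformId = inject(PLATFORM_ID);

  // On the server, read the Cookie header of the incoming request;
  // in the browser, read document.cookie.
  const cookies = isPlatformServer(platformId)
    ? request?.headers.get('cookie') ?? ''
    : document.cookie;

  return cookies.match('(^|;)\\s*' + name + '\\s*=\\s*([^;]+)')?.pop() ?? '';
};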
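getCookie builds its regular expression directly from name, so characters such as . or + in a cookie name are interpreted as regex syntax (a name like session.id would also match sessionXid). A regex-free alternative is a small parser that getCookie could delegate to; readCookie is a hypothetical helper, and it deliberately does not URL-decode values.

```typescript
// Hypothetical helper: reads one cookie from a Cookie header or a document.cookie string.
function readCookie(cookieString: string, cookieName: string): string {
  for (const part of cookieString.split(';')) {
    const separator = part.indexOf('=');
    if (separator === -1) continue; // skip malformed or empty segments
    // Pairs are separated by "; ", so trim surrounding whitespace before comparing.
    const key = part.slice(0, separator).trim();
    if (key === cookieName) {
      return part.slice(separator + 1).trim();
    }
  }
  return '';
}

const header = 'theme=dark; session.id=abc123; lang=pl';
readCookie(header, 'session.id'); // 'abc123'
readCookie(header, 'currency'); // ''
readCookie('sessionXid=evil', 'session.id'); // '' (exact name match, no regex)
```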

Request Context

While the REQUEST token delivers raw HTTP information, the REQUEST_CONTEXT token carries processed, application-specific data: whatever you derive by combining the raw request with business logic, user profiles, or application state. You pass this context as the second argument of the handle() method in server.ts.

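import express from 'express';
import { AngularNodeAppEngine, writeResponseToNodeResponse } from '@angular/ssr/node';

const app = express();
const angularApp = new AngularNodeAppEngine();

app.use('/**', (req, res, next) => {
  // isBotUserAgent and isFeatureFlagEnabled are the app's own helpers (not shown)
  const enableCustomerChat =
    !isBotUserAgent(req.headers['user-agent']) &&
    isFeatureFlagEnabled('customer_chat');

  angularApp
    // The second argument becomes the value of the REQUEST_CONTEXT token
    .handle(req, {
      enableCustomerChat,
    })
    .then((response) =>
      response ? writeResponseToNodeResponse(response, res) : next()
    )
    .catch(next);
});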
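On the Angular side, the object passed to handle() is read with inject(REQUEST_CONTEXT). Because the token is null in the browser, rendering directly from it would produce different DOM on the server and the client, so this sketch hands the flag over through TransferState. The AppRequestContext shape, the state key, and isCustomerChatEnabled are assumptions for illustration.

```typescript
import { inject, makeStateKey, REQUEST_CONTEXT, TransferState } from '@angular/core';

// Assumed shape of the object passed to angularApp.handle() in server.ts.
interface AppRequestContext {
  enableCustomerChat?: boolean;
}

// Hypothetical Transfer State key for the flag.
const customerChatKey = makeStateKey<boolean>('enable-customer-chat');

// Hypothetical helper; call it in an injection context (e.g. a component constructor).
export const isCustomerChatEnabled = (): boolean => {
  const transferState = inject(TransferState);
  // REQUEST_CONTEXT is typed as unknown and is null in the browser.
  const context = inject(REQUEST_CONTEXT) as AppRequestContext | null;

  if (context) {
    // Server: read the flag and pass it on, so the client renders the same DOM.
    const enabled = context.enableCustomerChat ?? false;
    transferState.set(customerChatKey, enabled);
    return enabled;
  }

  // Browser: reuse the value transferred from the server (false if it is missing).
  return transferState.get(customerChatKey, false);
};
```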

Response

The RESPONSE_INIT token provides access to the response initialization options. This allows you to set headers and the status code for the response dynamically. Use this token whenever headers or status codes need to be determined at runtime.

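import { inject, RESPONSE_INIT } from '@angular/core';

// @Component decorator omitted for brevity
export default class ProductNotFoundPageComponent {
  private readonly _responseInit = inject(RESPONSE_INIT);

  constructor() {
    // RESPONSE_INIT is null outside server-side rendering (e.g. in the browser)
    if (!this._responseInit) return;

    this._responseInit.status = 404;
    this._responseInit.headers = { 'Cache-Control': 'no-cache' };
  }
}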

However, if you already know which status code or headers should be included in the response, you can define them directly in the server routes.

// Entry in the server routes array (ServerRoute[] from @angular/ssr)
{
  path: 'products/not-found',
  renderMode: RenderMode.Server,
  headers: { 'Cache-Control': 'no-cache' },
  status: 404,
},

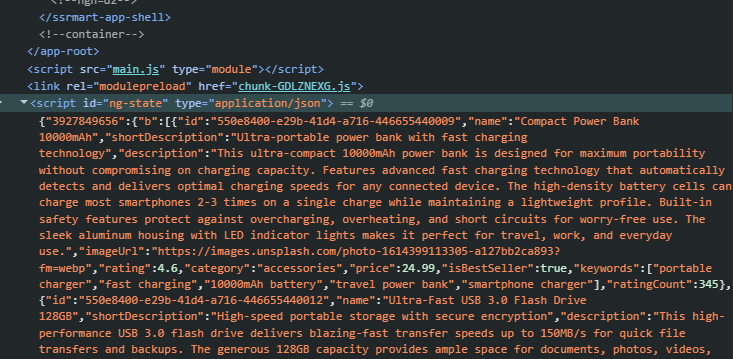

HTTP caching

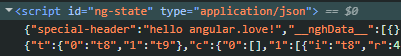

Angular caches the responses to HTTP requests made during server-side rendering and reuses them during the initial client-side render. The responses are serialized and embedded in the server’s initial response, allowing Angular to serve them from the cache until the application becomes stable.

Here’s an example of a cached search request for bestseller products displayed on the home page:

By default, only GET and HEAD requests that do not include Authorization or Proxy-Authorization headers are cached. This behavior can be customized using the withHttpTransferCacheOptions feature function of provideClientHydration. With it, you can:

- Include POST requests – particularly useful for search requests with many parameters

- Include requests with authorization headers – allowing secured data to benefit from caching

- Define a custom filter function – to determine which requests should be cached (my implementation relies on the HttpContextToken)

- Specify response headers – to be included in the JSON with the serialized response

provideClientHydration(
  withHttpTransferCacheOptions({
    includePostRequests: true,
    includeRequestsWithAuthHeaders: true,
    filter: (request) => !request.context.get(SKIP_HYDRATION_CACHE),
    includeHeaders: ['Content-Type'],
  })
),
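The filter above reads a SKIP_HYDRATION_CACHE flag whose definition is not shown. A plausible declaration is an HttpContextToken that defaults to false and is set per request through HttpContext; the service, its method, and the /api/cart endpoint below are hypothetical.

```typescript
import { HttpClient, HttpContext, HttpContextToken } from '@angular/common/http';
import { inject, Injectable } from '@angular/core';

// Hypothetical declaration: requests are cached unless this flag is set to true.
export const SKIP_HYDRATION_CACHE = new HttpContextToken<boolean>(() => false);

@Injectable({ providedIn: 'root' })
export class CartApiService { // hypothetical service
  private readonly http = inject(HttpClient);

  getCart() {
    // Marks this request so the transfer-cache filter excludes it.
    return this.http.get('/api/cart', {
      context: new HttpContext().set(SKIP_HYDRATION_CACHE, true),
    });
  }
}
```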

This cache can be disabled by using:

provideClientHydration(
  withNoHttpTransferCache()
),
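The default rules and the options above can be summarized as a single predicate. This is a simplified model for reasoning about which requests end up in the transfer cache, not Angular's source code; CacheCandidate, its skip field (standing in for the SKIP_HYDRATION_CACHE context flag), and isCacheable are hypothetical names.

```typescript
// Simplified model of the transfer-cache decision; not Angular's source code.
interface CacheCandidate {
  method: string;
  headers: Record<string, string>;
  skip?: boolean; // stands in for the SKIP_HYDRATION_CACHE context flag
}

interface TransferCacheOptions {
  includePostRequests?: boolean;
  includeRequestsWithAuthHeaders?: boolean;
  filter?: (request: CacheCandidate) => boolean;
}

function isCacheable(request: CacheCandidate, options: TransferCacheOptions = {}): boolean {
  const method = request.method.toUpperCase();
  // Only GET and HEAD by default; POST only when explicitly included.
  const methodAllowed =
    method === 'GET' ||
    method === 'HEAD' ||
    (method === 'POST' && options.includePostRequests === true);
  if (!methodAllowed) return false;

  // Header names are case-insensitive, so compare them in lower case.
  const headerNames = Object.keys(request.headers).map((h) => h.toLowerCase());
  const hasAuthHeader =
    headerNames.includes('authorization') || headerNames.includes('proxy-authorization');
  if (hasAuthHeader && options.includeRequestsWithAuthHeaders !== true) return false;

  // A custom filter has the final say for requests that passed the default rules.
  return options.filter ? options.filter(request) : true;
}

const skipFlagged = (request: CacheCandidate): boolean => !request.skip;

isCacheable({ method: 'GET', headers: {} }); // true
isCacheable({ method: 'POST', headers: {} }); // false
isCacheable({ method: 'POST', headers: {} }, { includePostRequests: true }); // true
isCacheable({ method: 'GET', headers: { Authorization: 'Bearer token' } }); // false
isCacheable({ method: 'GET', headers: {}, skip: true }, { filter: skipFlagged }); // false
```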

Transfer State

To deliver cached responses, Angular uses Transfer State. It’s a key-value store that is passed from the server-side application to the client-side application.

TransferState is injectable, so you can also use it directly to transfer your own data in special cases. Values in the store are serialized and deserialized with JSON.stringify and JSON.parse, so only primitives and plain (non-class) objects survive the round trip without loss.

import { isPlatformServer } from '@angular/common';
import { inject, makeStateKey, PLATFORM_ID, REQUEST, TransferState } from '@angular/core';

const specialHeaderStateKey = makeStateKey<string | null>('special-header');

// Server: copy the x-special-header request header into Transfer State.
const transferSpecialHeader = (): void => {
  const transferState = inject(TransferState);
  const request = inject(REQUEST);
  const platformId = inject(PLATFORM_ID);

  if (isPlatformServer(platformId)) {
    transferState.set(
      specialHeaderStateKey,
      request?.headers.get('x-special-header') ?? null
    );
  }
};

// Read the transferred value (typically in the browser), optionally removing it from the store.
const readSpecialHeader = (removeFromTransferState = false): string | null => {
  const transferState = inject(TransferState);
  const value = transferState.get(specialHeaderStateKey, null);

  if (removeFromTransferState) {
    transferState.remove(specialHeaderStateKey);
  }

  return value;
};
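A quick way to see what “non-lossy” means in practice is to push a value through the same JSON.stringify/JSON.parse round trip that Transfer State relies on. The product object and the Price class below are made up for illustration.

```typescript
// Mimics the JSON round trip used for Transfer State values.
const roundTrip = (value: unknown): unknown => JSON.parse(JSON.stringify(value));

class Price {
  amount: number;
  currency: string;
  constructor(amount: number, currency: string) {
    this.amount = amount;
    this.currency = currency;
  }
  format(): string {
    return `${this.amount} ${this.currency}`;
  }
}

const original = {
  title: 'Headphones', // primitive: preserved
  tags: ['audio', 'wireless'], // plain array: preserved
  price: new Price(199, 'EUR'), // class instance: becomes a plain object without format()
  addedAt: new Date('2024-01-01T00:00:00Z'), // Date: becomes an ISO string
  stock: new Map([['warehouse-a', 3]]), // Map: becomes an empty object
  discount: undefined, // undefined property: dropped
};

const restored = roundTrip(original) as Record<string, unknown>;
// restored.price instanceof Price  -> false
// restored.addedAt                 -> '2024-01-01T00:00:00.000Z'
// restored.stock                   -> {}
// 'discount' in restored           -> false
```

If such values need to cross the boundary, convert them to plain data on the server (for example, an ISO string or an array of entries) and rebuild them on the client.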

Analog.js

I’ve already mentioned Analog.js – a really interesting solution in the Angular ecosystem. It’s a full-stack meta-framework that brings a modern developer experience with features like:


- File-based routing – eliminates the need for manual route configuration. Simply create files in the src/app/pages directory, and Analog automatically generates routes based on the file structure

- API routes – allow you to define serverless functions directly within your Angular project. Place files in the src/server/routes directory, and Analog exposes them as API endpoints. This co-location of frontend and backend code simplifies full-stack development and deployment.

- Hybrid rendering – Analog supports server-side rendering out of the box but gives you flexible control over how each page is rendered. You can choose between SSR, SSG, or CSR on a per-route basis.

- Vite-powered build system – provides fast development server startup, hot module replacement (HMR) and production builds.

Analog.js is particularly well-suited for content-heavy applications, marketing sites, e-commerce platforms, and any project where SEO and initial page load performance are critical. It also gives you a more streamlined full-stack development experience.

The framework maintains full compatibility with the Angular ecosystem, meaning you can use existing Angular libraries, components, and tooling while benefiting from Analog’s capabilities.

Feel free to check out our article about Analog.js.

Conclusions

Angular SSR has come a long way from being a complex, experimental feature to something you can actually implement without losing your sanity. The performance gains are real: faster initial page loads, better SEO rankings, and happier users who don’t have to stare at loading spinners.

The key thing to remember is that SSR isn’t a magic bullet for every Angular app. If you’re building a dashboard that lives behind authentication, you probably don’t need it. But if SEO matters because you’re serving public content, or if performance is critical for your business, SSR can make a meaningful difference. The hybrid approach is particularly smart: use SSR where it helps and stick with client-side rendering where it doesn’t.

Looking ahead, SSR is only getting better with improvements in edge computing, better caching strategies, and the Angular team’s continued focus on developer experience. If you’ve been on the fence about implementing SSR, now’s a good time to give it a shot.